Overview

The project I have chosen is an application that uses machine learning to assist doctors in diagnosing COVID-19 on Chest X-rays. The Chest X-ray is typically the first imaging exam performed when COVID-19 is suspected. Such technology could therefore help predict the severity of COVID-19 and also help in the prognosis of the illness. By utilizing Convolutional Neural Networks (CNNs), coupled with large datasets of Chest X-ray images, I would aim to train such an application to recognize and differentiate between a normal Chest X-ray and the Chest X-ray of a patient with COVID-19. Such an application could be used in both hospital and suburban practices, allowing severe COVID-19 patients to be treated as soon as possible.

Motivation

Since its emergence in December 2019, COVID-19 has spread rapidly around the world. As of March 2022, the WHO Coronavirus (COVID-19) Dashboard reports over 450 million confirmed cases of COVID-19 and 6 million deaths (World Health Organization 2022). The actual number of deaths is likely to be much higher, as many countries lack the means to test for and report cases. With hundreds, if not thousands, of hospitals at full capacity, the need for an application to assist clinicians has never been greater. Not only would it lessen the burden on the healthcare system, it would allow clinicians to provide the best level of care for patients. Sadre et al. (2021) reported on a number of CNNs that were trained for this purpose, the best of which achieved an accuracy of 98.75%. Whilst this is a very high figure, it raises the question: what causes the remaining 1.25% of cases to be misclassified? This program would aim to improve on the sensitivity and specificity of diagnosing COVID-19. One difficulty for CNNs is classifying images that vary from one another. For example, a patient may not be positioned perfectly for a Chest X-ray. Data augmentation can address this. By adding many Chest X-rays with variations in position to the training data, we can train the network to recognize such variance (Bhuiya 2022).
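To illustrate the augmentation idea, here is a minimal NumPy sketch that simulates imperfect positioning by randomly shifting an image and slightly varying its brightness. It assumes each Chest X-ray is a 2D grayscale array with values in [0, 1]. The function names, the maximum shift of 10 pixels and the ±10% brightness range are hypothetical choices for illustration. In a Keras pipeline, built-in preprocessing layers such as `RandomTranslation` and `RandomRotation` would normally be used instead.

```python
import numpy as np


def shift_image(image, dy, dx):
    """Translate a 2D image by dy rows and dx columns, filling the gap with 0."""
    h, w = image.shape
    shifted = np.zeros_like(image)
    # Matching source/destination slices; both are empty if the shift exceeds the image size.
    src_y = slice(max(0, -dy), max(0, min(h, h - dy)))
    dst_y = slice(max(0, dy), max(0, min(h, h + dy)))
    src_x = slice(max(0, -dx), max(0, min(w, w - dx)))
    dst_x = slice(max(0, dx), max(0, min(w, w + dx)))
    shifted[dst_y, dst_x] = image[src_y, src_x]
    return shifted


def augment_xray(image, rng, max_shift=10, brightness=0.1):
    """Return a randomly shifted, brightness-jittered copy of a [0, 1] image.

    max_shift and brightness are hypothetical settings for illustration.
    """
    # A random offset simulates a patient positioned slightly off-centre.
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    out = shift_image(image, int(dy), int(dx))
    # A random scale factor simulates differences in exposure.
    scale = rng.uniform(1.0 - brightness, 1.0 + brightness)
    return np.clip(out * scale, 0.0, 1.0)


rng = np.random.default_rng(seed=0)
xray = rng.random((224, 224))  # stand-in for a real, normalized Chest X-ray
variants = [augment_xray(xray, rng) for _ in range(8)]  # 8 augmented copies
```

Each call produces a slightly different copy of the same image, so the network sees the same anatomy in many positions during training.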

Description

The product will be an automated application that is installed on the doctor's computer. It will be a feature of an image viewing technology such as InteleViewer (InteleViewer 2022). The feature will use machine learning, coupled with imaging databases, to help differentiate healthy patients from COVID-19 infected patients. A Chest X-ray is input into the application, and the output is a prediction of whether the image represents a patient with COVID-19. The application would use deep learning, a subset of machine learning, and in particular CNNs. To understand how the program will help clinicians in categorizing COVID-19 patients, we should start with Machine learning and Deep learning.

Machine learning is a data science method that allows computers to learn from data without being explicitly programmed with rules. Its algorithms take advantage of large datasets, making predictions and improving with experience. Machine learning is split into 3 categories: Supervised learning, Unsupervised learning and Reinforcement learning. During Supervised learning, labelled data are provided to the algorithm, with the expected outputs labelled by experts (e.g. radiologists); these labels become the Ground Truth for the algorithm. Ground Truth refers to data that the program assumes to be true. The algorithm attempts to learn a general rule that maps inputs to outputs. For this project, the program would be given images of COVID-19 patients and normal patients from a dataset. Radiologists would work closely with the engineers to ensure that the features extracted by the machine relate to COVID-19. Unsupervised learning differs in that no labels are given to the algorithm; its job is to find the hidden patterns in the data and group the data itself. Reinforcement learning treats the consequences of repeated interactions with its environment as learning points. It is rewarded for correct actions and penalized for incorrect ones, and the ideal solution maximizes the total reward (Bajaj 2021). Our program would aim to use both Supervised and Unsupervised learning, with Reinforcement learning as a backup (Choy et al. 2018).
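To make "learning a general rule from labelled examples" concrete, here is a minimal Supervised learning sketch in NumPy: a logistic regression classifier trained by gradient descent. The two-feature synthetic data is a hypothetical stand-in for image features. The labels (1 = COVID-19, 0 = normal) play the role of the radiologist-provided Ground Truth. This is illustrative only and is not the CNN the project would use.

```python
import numpy as np


def train_logistic_regression(X, y, lr=0.1, epochs=500):
    """Fit weights w and bias b so that sigmoid(X @ w + b) approximates labels y."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted probabilities
        error = p - y  # gradient of the cross-entropy loss w.r.t. the scores
        w -= lr * X.T @ error / len(y)
        b -= lr * error.mean()
    return w, b


def predict(X, w, b):
    """Label 1 (COVID-19) when the predicted probability is at least 0.5."""
    return (1.0 / (1.0 + np.exp(-(X @ w + b))) >= 0.5).astype(int)


rng = np.random.default_rng(0)
# Synthetic labelled data: two clusters standing in for "normal" and "COVID-19".
X = np.vstack([rng.normal(-2.0, 1.0, (100, 2)), rng.normal(2.0, 1.0, (100, 2))])
y = np.array([0] * 100 + [1] * 100)  # the Ground Truth labels
w, b = train_logistic_regression(X, y)
accuracy = (predict(X, w, b) == y).mean()
```

The algorithm is never told the rule that separates the two groups; it infers the weights `w` and `b` purely from the labelled examples.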

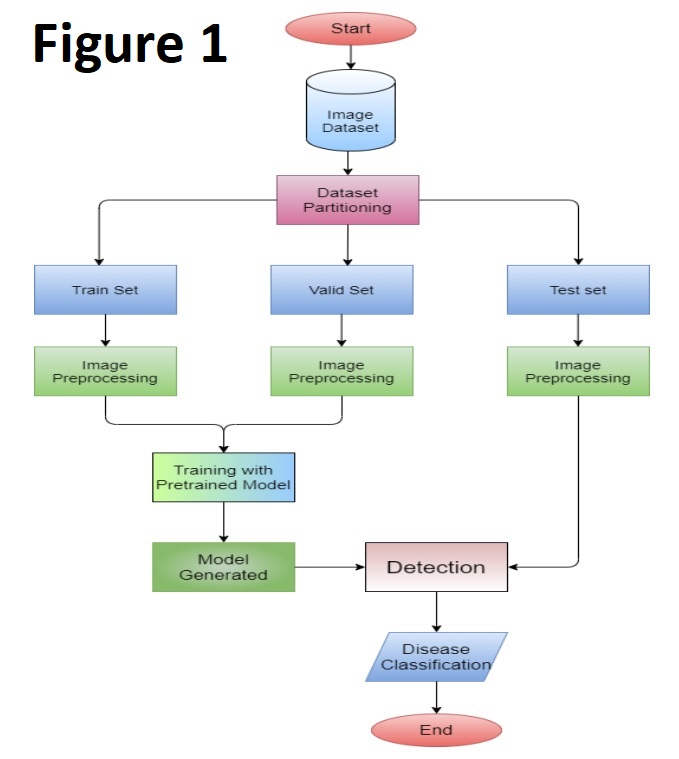

The datasets provided to the program are essential in training it. There are 3 main datasets: Training, Validation and Testing. Mangeri et al. (2021) summarize how CNNs are trained in Figure 1. The Training set is the data the model is fitted to, as the algorithm learns from its examples. The Validation set is separate data used to compare different models and to tune the model's settings (hyperparameters). Finally, the Testing set is used after the algorithm is developed. This is a further, unseen dataset, used to assess the accuracy, specificity and overall performance of the algorithm (Cheng et al. 2021).
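The three-way split and the final evaluation on the Testing set can be sketched in plain NumPy as below. The 70/15/15 proportions, the fixed random seed and the function names are assumptions for illustration. Ideally, all images from one patient would be kept in the same set, so that the Testing set truly contains unseen patients. Sensitivity (the share of COVID-19 cases detected) and specificity (the share of normal cases correctly cleared) are computed alongside accuracy, since a high accuracy alone can hide poor performance on one class.

```python
import numpy as np


def split_indices(n, train_frac=0.70, val_frac=0.15, seed=0):
    """Shuffle n sample indices and split them into train/validation/test sets."""
    idx = np.random.default_rng(seed).permutation(n)
    n_train = round(n * train_frac)
    n_val = round(n * val_frac)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


def evaluate(y_true, y_pred):
    """Accuracy, sensitivity and specificity for binary labels (1 = COVID-19).

    Assumes both classes are present in y_true.
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = np.sum((y_true == 1) & (y_pred == 1))  # COVID-19 correctly detected
    tn = np.sum((y_true == 0) & (y_pred == 0))  # normal correctly cleared
    fp = np.sum((y_true == 0) & (y_pred == 1))  # false positive (false alarm)
    fn = np.sum((y_true == 1) & (y_pred == 0))  # false negative (missed case)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


train_idx, val_idx, test_idx = split_indices(1000)
metrics = evaluate([1, 1, 0, 0], [1, 0, 0, 0])
```

In the example, one of the two COVID-19 cases is missed, giving an accuracy of 0.75, a sensitivity of 0.5 and a specificity of 1.0.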


What are Convolutional Neural Networks? How does the program use CNNs to distinguish between a healthy patient and a COVID-19 infected patient?

We understand how machine learning operates, but our system also uses a method known as Deep learning. Deep learning is a subgroup of Machine learning that uses systems loosely modelled on the human brain, known as Neural Networks. A deep network commonly contains 3 or more layers, and these layers are essential in turning the input data into the output shown to the user. The network allows the application to be trained on large amounts of data, learning from its mistakes and improving its accuracy as training progresses. As the algorithm is fed through a dataset, the extra layers help it reach a high degree of accuracy (IBM Cloud Education 2020). Models that use Deep learning can be classified into 2 categories: typical networks, which take one-dimensional inputs, and Convolutional Neural Networks (CNNs), which take two- and three-dimensional inputs (Choy et al. 2018).
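Below is a minimal NumPy sketch of a "typical" fully connected network with three layers. It assumes randomly initialized (untrained) weights and a tiny 8×8 image, and the layer sizes are hypothetical. It shows the one-dimensional input: the image must be flattened into a vector before entering the network, which discards the 2D arrangement of pixels that a CNN preserves.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical layer sizes: 64 inputs (a flattened 8x8 image) -> 16 -> 8 -> 1 output.
sizes = [64, 16, 8, 1]
weights = [rng.normal(0.0, 0.1, (m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n) for n in sizes[1:]]


def forward(image):
    """Pass a 2D image through the fully connected network; return P(COVID-19)."""
    x = image.reshape(-1)  # flatten to a 1D vector: the spatial layout is lost
    for w, b in zip(weights[:-1], biases[:-1]):
        x = np.maximum(0.0, x @ w + b)  # hidden layer with ReLU activation
    logit = x @ weights[-1] + biases[-1]  # final layer: a single raw score
    return float(1.0 / (1.0 + np.exp(-logit[0])))  # sigmoid -> probability


p = forward(rng.random((8, 8)))  # meaningless until the weights are trained
```

Training would adjust `weights` and `biases` so that the output probability matches the Ground Truth labels.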

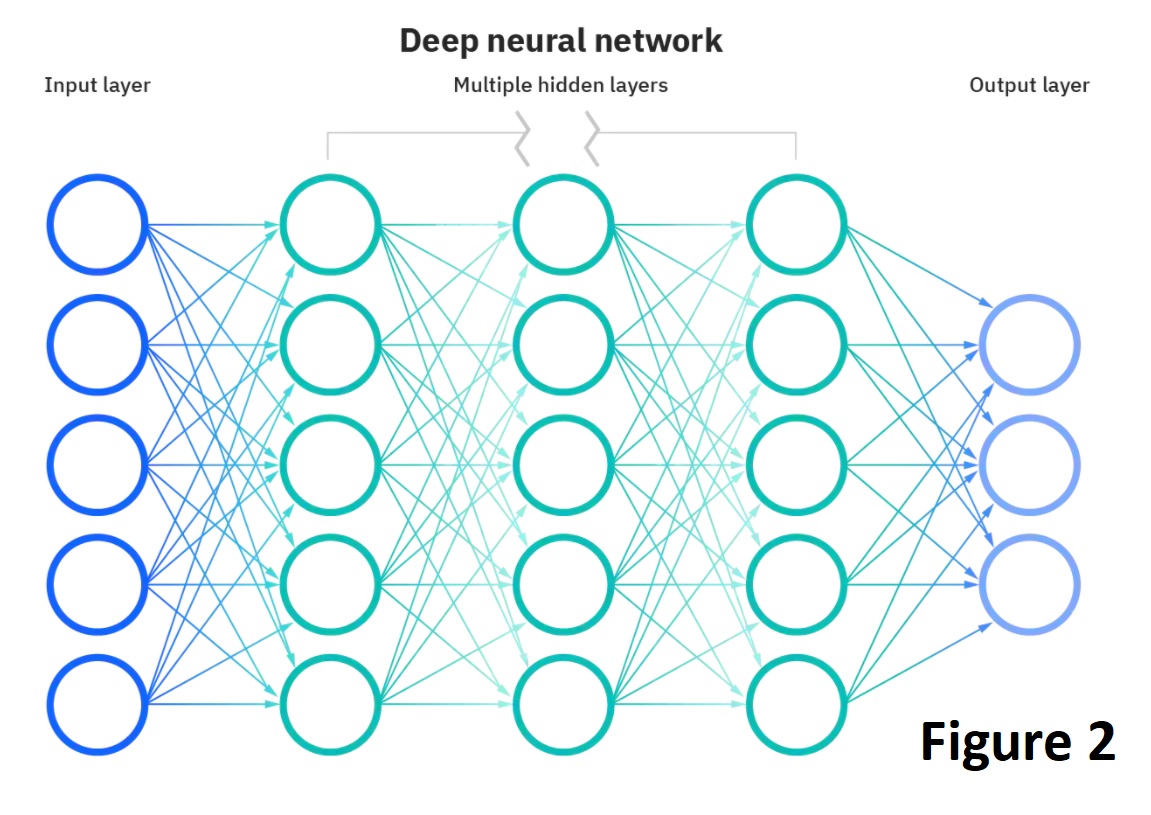

For this program, a CNN would be designed containing a set of kernels, or filters (known as the convolutional layer), that compare the input to matrices that encode specific features. The CNN is formed from 3 parts: an input layer, an output layer, and multiple hidden layers in between that filter the inputs to extract useful features. Figure 2 highlights a typical CNN, produced by IBM Cloud Education (2020). The layers within a CNN usually consist of convolutional, ReLU, normalization, pooling, fully connected and SoftMax layers (Uddin et al. 2021). The pooling layers improve performance by reducing the spatial dimensions of the data, which lowers the computational cost and helps avoid overfitting. A Rectified Linear Unit (ReLU) layer is important, as it introduces non-linearity as the data progresses through each subsequent layer (Low et al. 2021). The normalization layer also helps avoid overfitting whilst maintaining a high degree of accuracy when classifying data (Rosebrock 2021). Once the data reaches the fully connected layer, the features are classified. However, the data must pass through the final SoftMax layer before an output can be produced. In our program, the SoftMax layer acts as a translator: it converts the values produced by the CNN into a percentage for each class (Wood n.d.).
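The core operations named above can be sketched in a few lines of NumPy. This is a simplified, single-channel illustration under stated assumptions: no padding, stride 1 for the convolution, and 2×2 max pooling. The vertical-edge kernel is a hypothetical example of a feature-encoding matrix; a real CNN learns its kernel values during training. As in most deep-learning libraries, the "convolution" is implemented as cross-correlation (the kernel is not flipped).

```python
import numpy as np


def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation with stride 1."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Compare the image patch with the kernel: a large value means the feature is present.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    """Rectified Linear Unit: keep positive values, set negatives to zero."""
    return np.maximum(0.0, x)


def max_pool(x, size=2):
    """Non-overlapping max pooling; rows/columns that don't fill a window are dropped."""
    h = x.shape[0] // size * size
    w = x.shape[1] // size * size
    blocks = x[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))


# Hypothetical vertical-edge kernel applied to a 6x6 image with a bright right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)
feature_map = relu(conv2d(image, kernel))  # 4x4 map, strongest at the edge
pooled = max_pool(feature_map)  # 2x2 map: half the spatial size in each direction
```

The feature map responds only where dark meets bright, and pooling keeps that response while shrinking the map, which is what makes deeper layers cheaper to compute.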

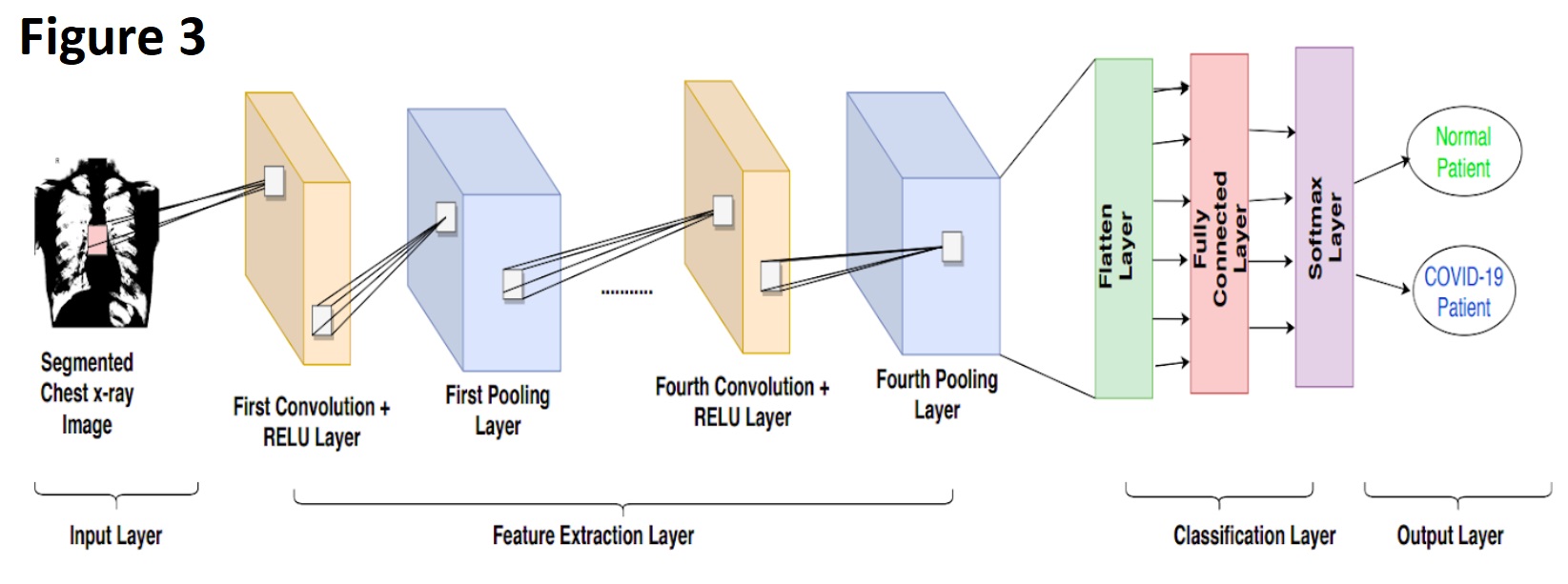

The SoftMax output is then used to predict whether the patient is COVID-19 positive. Figure 3 highlights a simplified CNN, from the input Chest X-ray image to the output shown to the user: whether or not the patient is COVID-19 positive.
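The "translator" role of the SoftMax layer can be shown with a short NumPy sketch. The two raw scores are made-up example values for the classes [Normal, COVID-19], and the 50% decision threshold is an assumption. In practice, the threshold would be chosen on the Validation set to balance sensitivity and specificity.

```python
import numpy as np

CLASSES = ["Normal", "COVID-19"]


def softmax(scores):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    shifted = scores - np.max(scores)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()


def report(scores, threshold=0.5):
    """Return the predicted label and the COVID-19 probability as a percentage."""
    probs = softmax(np.asarray(scores, dtype=float))
    covid_pct = 100.0 * probs[CLASSES.index("COVID-19")]
    label = "COVID-19 positive" if covid_pct >= 100.0 * threshold else "COVID-19 negative"
    return label, round(float(covid_pct), 1)


print(report([0.4, 2.1]))  # made-up scores -> ('COVID-19 positive', 84.6)
```

Because SoftMax depends only on the differences between scores, a gap of 1.7 in favour of the COVID-19 class translates into roughly an 85% prediction, whatever the absolute score values are.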


The program would not be limited to distinguishing COVID-19 patients from healthy patients. By applying a similar algorithm to a different dataset of lung images, the machine could also classify other lung pathologies, such as pneumonia or cancer.

Tools and Technologies

There are several open-source tools that would assist in the project; however, we would aim to train our CNN using TensorFlow coupled with Keras. Since training CNNs requires large computational and memory resources, we would require multiple workstations with multi-core processors, rather than single-core processors. Furthermore, to effectively train the CNN to distinguish between a normal Chest X-ray and that of someone with COVID-19 (or another pathology), we would require a huge dataset of both healthy and diseased Chest X-ray images. These datasets would be accessed from publicly available websites. There is a myriad of Chest X-ray datasets available online. "MIMIC-CXR" is one of these, a very large dataset that contains 227,835 imaging studies for 65,379 patients (Johnson et al. 2019). Another dataset that could be included is the NIH Chest X-ray dataset, with over 100,000 de-identified Chest X-ray images (NIH Clinical Center 2017).
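Below is a minimal sketch of how such a CNN might be defined with TensorFlow's Keras API. The 224×224 grayscale input, the filter counts, the two convolutional blocks and the two output classes are illustrative assumptions, not a tuned architecture. The layers mirror those described earlier: convolution with ReLU, normalization, pooling, a fully connected layer and a SoftMax output.

```python
import tensorflow as tf
from tensorflow.keras import layers


def build_cnn(input_shape=(224, 224, 1), num_classes=2):
    """Small illustrative CNN; classes are [Normal, COVID-19] by default."""
    model = tf.keras.Sequential([
        layers.Input(shape=input_shape),
        layers.Rescaling(1.0 / 255),  # map 8-bit pixel values to [0, 1]
        layers.Conv2D(32, 3, activation="relu"),  # convolution + ReLU
        layers.BatchNormalization(),  # normalization layer
        layers.MaxPooling2D(2),  # pooling halves the spatial dimensions
        layers.Conv2D(64, 3, activation="relu"),
        layers.BatchNormalization(),
        layers.MaxPooling2D(2),
        layers.Flatten(),
        layers.Dense(64, activation="relu"),  # fully connected layer
        layers.Dense(num_classes, activation="softmax"),  # class probabilities
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",  # integer labels 0..num_classes-1
        metrics=["accuracy"],
    )
    return model


model = build_cnn()
model.summary()
```

Training would then call `model.fit` on the Training set, passing the Validation set as `validation_data`. Setting `num_classes` to a larger value would allow other pathologies, such as pneumonia, to be added as extra output classes.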

Skills Required

As we require TensorFlow with Keras, the models would need to be implemented in Python, as Keras provides a Python interface. During the supervised phase of machine learning, we would require several data scientists and engineers working with radiologists. The radiologists would assist in the training phase by advising the engineers on whether a section of the image that the program classifies as COVID-19 is a true finding or a false positive. However, radiologists are often very busy with reporting duties and interventional procedures. It may therefore be difficult to find multiple radiologists who can spend an adequate amount of time with the data scientists and engineers developing the program. As Keras and TensorFlow are both open-source software, they would be easy to access for the program. Programming the machine to attain a very high accuracy may take a couple of months, as we want time to troubleshoot our code and work with the radiologists and engineers to eliminate any margin of error where possible.

Outcome

If the project is successful, it would help doctors in treating COVID-19 patients, whilst lessening the burden on the healthcare system. It could be used anywhere in the world, but ideally in countries where the healthcare system is already struggling. As COVID-19 continues to mutate and devastate health systems, the ability to rapidly and accurately recognize COVID-19 infected patients would also lead to better patient prognosis. It should be noted that multiple machine learning algorithms have been reported to outperform human doctors when diagnosing COVID-19. However, several published papers that studied the processes used by CNNs found that the CNNs based more of their classifications on regions outside the lung area. This suggests that the machine was performing shortcut learning (López-Cabrera et al. 2021). Additionally, another study compared CNN performance on Chest X-rays with the lungs removed against Chest X-rays showing the lungs only. It found that with the lungs removed, the CNN accuracy did not change much, and in some cases it even outperformed the CNN with the lungs only (Sadre et al. 2021). This raises the possibility that these algorithms use data outside of the region of interest (the lungs) when producing the output result. This program does not aim to replace doctors, but rather to provide them with a supplementary feature when triaging patients.
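The lungs-removed versus lungs-only comparison can be illustrated by masking an image with a lung segmentation. The sketch below assumes a binary lung mask is already available, for example drawn by a radiologist or produced by a separate segmentation model. It simply builds the two variants being compared. The function name and the rectangular toy mask are hypothetical; this is not the published study's code.

```python
import numpy as np


def lung_variants(image, lung_mask):
    """Return (lungs_only, lungs_removed) copies of a 2D Chest X-ray.

    lung_mask is an array of the same shape, True (or 1) inside the lungs.
    """
    mask = lung_mask.astype(bool)
    lungs_only = np.where(mask, image, 0.0)  # blank everything outside the lungs
    lungs_removed = np.where(mask, 0.0, image)  # blank the lungs themselves
    return lungs_only, lungs_removed


# Toy example: a 6x6 "X-ray" with two rectangular, hypothetical "lungs".
image = np.random.default_rng(0).random((6, 6))
mask = np.zeros((6, 6), dtype=bool)
mask[1:5, 1:3] = True
mask[1:5, 4:6] = True
only, removed = lung_variants(image, mask)
```

If a CNN classifies the `removed` images about as accurately as the `only` images, it is likely relying on features outside the lungs, which is the shortcut-learning concern described above.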